Wallaroo and Ampere

Accelerate AI Inference by 7X

Business Need

Modern AI deployments demand high performance, which often drives up power consumption and cost per inference and makes it difficult for businesses to manage total cost of ownership (TCO) as the volume of AI inference workloads grows. Ampere Cloud Native Processors are an ideal solution for data center deployments, maximizing performance per rack and providing unparalleled scalability.

Given Wallaroo’s commitment to giving its clients a comprehensive platform for deploying AI workloads at scale, bringing Wallaroo’s solutions to Ampere’s Arm-based architecture was a natural step for both companies. Ampere processors, boosted further by Ampere Optimized AI Frameworks and by Wallaroo’s additional acceleration, together deliver a dramatic increase in performance without an increase in operational costs.

Results

The Wallaroo team tested the performance of the ResNet50 computer vision model on Dpsv5-series Azure VM instances powered by 64-core Ampere Altra processors. The results showed clear performance advantages from Ampere CPUs and Wallaroo.ai, with an additional boost from the Ampere Optimized ONNXRuntime Framework.

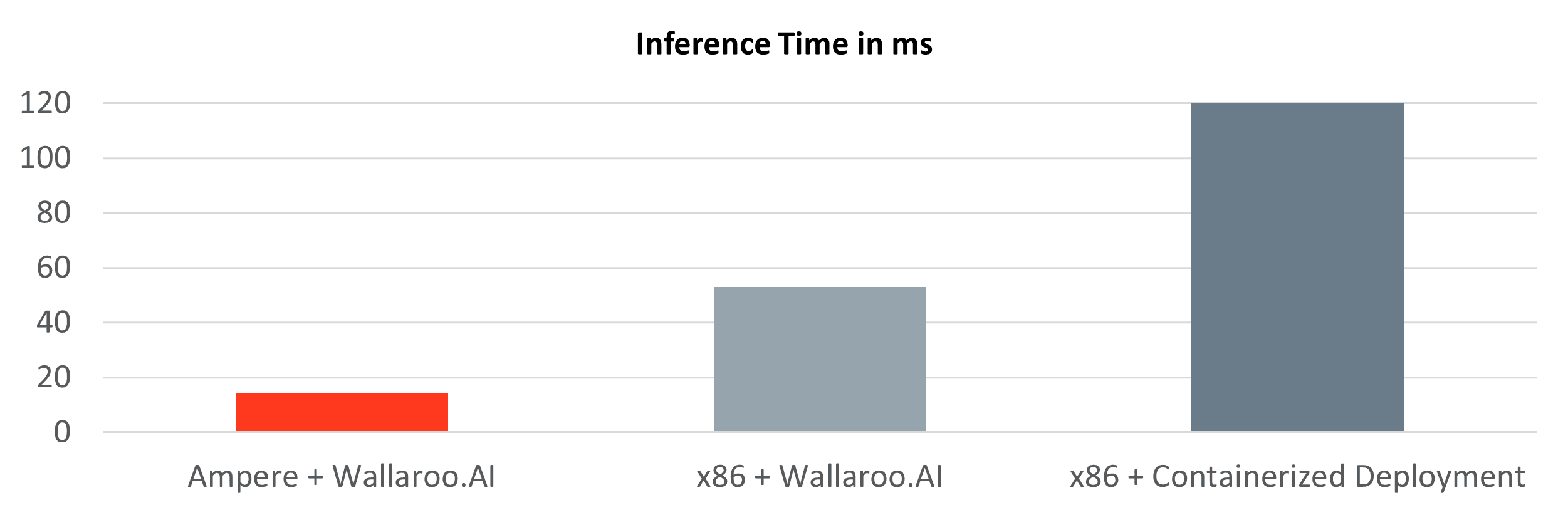

Testing Results:

- Wallaroo running on an Ampere Altra 64-bit Dpsv5-series Azure VM with Ampere AI needs only 17 ms per inference

- Wallaroo running on x86 needs 53 ms per inference

- Common containerized deployment on x86 (without Wallaroo) needs 120 ms per inference
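As a quick check on the headline figure, the speedups can be computed directly from the three latencies listed above. This minimal Python sketch assumes that "speedup" means the baseline's per-inference latency divided by the Ampere + Wallaroo latency; the test report does not state how the ratio was defined, and the dictionary labels are illustrative names only.

```python
# Per-inference ResNet50 latencies (ms) as reported in the testing results.
LATENCY_MS = {
    "Wallaroo on Ampere Altra (Dpsv5) + Ampere AI": 17.0,
    "Wallaroo on x86": 53.0,
    "Containerized deployment on x86 (no Wallaroo)": 120.0,
}

AMPERE = "Wallaroo on Ampere Altra (Dpsv5) + Ampere AI"


def speedup(baseline_ms: float, target_ms: float) -> float:
    """Speedup of target over baseline, assumed here to be a simple latency ratio."""
    return baseline_ms / target_ms


if __name__ == "__main__":
    # Compare every configuration against the Ampere + Wallaroo result.
    for name, ms in LATENCY_MS.items():
        ratio = speedup(ms, LATENCY_MS[AMPERE])
        print(f"{name}: {ms:.0f} ms per inference, Ampere + Wallaroo is {ratio:.2f}x faster")
```

Against the containerized x86 baseline the ratio is 120 / 17 ≈ 7.06, which is the source of the roughly 7x figure. Against Wallaroo on x86 it is 53 / 17 ≈ 3.12.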

Compared with a common containerized deployment on x86, the combined Ampere and Wallaroo.ai solution delivers roughly a 7x performance increase (17 ms vs. 120 ms per inference), and about 3x compared with Wallaroo on x86 (17 ms vs. 53 ms), while also lowering the power needed to run complex ML use cases in production. Wallaroo’s integration with Ampere AI technology allows enterprises to scale production ML workloads on Arm instances, realizing faster ROI on their AI projects while consuming less power and delivering a lower cost per inference.

“This breakthrough Wallaroo/Ampere solution allows enterprises to improve inference performance, increase energy efficiency, and balance their ML workloads across available compute resources much more effectively, all of which is critical to meeting the huge demand for AI computing resources today while also addressing the sustainability impact of the explosion in AI.”

-Vid Jain, Chief Executive Officer of Wallaroo.AI

Future Opportunities

Ampere AI provides a best-in-class solution for inference at scale, further enhanced by partnerships such as the one with Wallaroo. Customers can optimize their costs and invest the savings in further development of their applications. With Ampere-based Dpsv5-series instances available on Microsoft Azure, this high-performance, cost-effective platform is accessible to all Azure customers, who can now benefit from the value of Ampere Cloud Native computing and Ampere AI acceleration, combined with further gains made through the fully integrated Wallaroo solution.

About Wallaroo.AI

Wallaroo.AI provides a simple, secure, and scalable production capability that fits into your end-to-end workflow and runs where you need it to. Wallaroo.AI empowers enterprise AI teams to operationalize machine learning (ML) to drive positive outcomes with a unified software platform that enables deployment, observability, optimization and scalability of ML in the cloud, in decentralized networks, and at the edge.

About Ampere AI

Ampere AI provides Ampere® Optimized AI Frameworks on the Ampere Altra Family of Cloud-Native Processors. Ampere Optimized TensorFlow, PyTorch, and ONNX Runtime deliver up to 2-5X higher speed through AI framework and silicon-side optimizations for Ampere’s ground-breaking processors. The free software is ready to use out of the box, and no API changes or additional coding of any kind are required.

For More Information

Learn more about the full range of Ampere AI solutions at the Ampere AI Solutions Portal.

Get to know Wallaroo.ai and its MLOps solutions at Wallaroo.ai.

Footnotes

All data and information contained herein is for informational purposes only and Ampere reserves the right to change it without notice. This document may contain technical inaccuracies, omissions and typographical errors, and Ampere is under no obligation to update or correct this information. Ampere makes no representations or warranties of any kind, including but not limited to express or implied guarantees of noninfringement, merchantability, or fitness for a particular purpose, and assumes no liability of any kind. All information is provided “AS IS.” This document is not an offer or a binding commitment by Ampere. Use of the products contemplated herein requires the subsequent negotiation and execution of a definitive agreement or is subject to Ampere’s Terms and Conditions for the Sale of Goods.

System configurations, components, software versions, and testing environments that differ from those used in Ampere’s tests may result in different measurements than those obtained by Ampere.

©2022 Ampere Computing. All Rights Reserved. Ampere, Ampere Computing, Altra and the ‘A’ logo are all registered trademarks or trademarks of Ampere Computing. Arm is a registered trademark of Arm Limited (or its subsidiaries). All other product names used in this publication are for identification purposes only and may be trademarks of their respective companies.

Ampere Computing® / 4655 Great America Parkway, Suite 601 / Santa Clara, CA 95054 / amperecomputing.com