Deploy VOD PoC demo on a Single-Node Canonical MicroK8s on Ampere Altra platform

NOTE: This tutorial contains 2 sections. The first section explains how to deploy Canonical MicroK8s on the Ampere Altra platform, with OpenEBS Mayastor installed for block storage. The second section provides the instructions to deploy the Video-on-Demand PoC demo on a MicroK8s single-node cluster with Mayastor.

- Estimated time to complete this tutorial: 1 hour.

Overview

Ampere is pleased to showcase an open source Video-on-Demand (VOD) Service on Canonical MicroK8s, an open-source lightweight Kubernetes system for automating deployment, scaling, and management of containerized applications. This specific combination of Ampere Altra family processors and Canonical MicroK8s is ideal for video services, like VOD streaming, as it provides a scalable platform (from single node to high-availability multi-node production clusters) all while minimizing physical footprint.

We understand the need to support effective and scalable VOD streaming workloads for a variety of clients, such as video service providers and digital service providers. This customer base requires a consistent workload lifecycle with predictable performance to accommodate their rapidly growing audience across the web. Ampere Altra and Altra Max processors are based on a low-power Arm architecture which offers high core counts per socket and delivers more scalable and predictable performance - conditions which are ideal for power-sensitive edge locations as well as large-scale datacenters.

MicroK8s can run on edge devices as well as large scale HA deployments. A single node MicroK8s is also ideal for offline development, prototyping, and testing. The following are the main components for this VOD PoC demo on a single-node MicroK8s:

- Canonical MicroK8s - a lightweight Kubernetes platform with full-stack automated operations.

- Mayastor - open-source, cloud-native storage software that provides scalable and performant block storage for containerized applications. It is based on the native NVMe-oF Container-Attached-Storage engine of OpenEBS.

- NGINX Ingress controller - a production-grade Ingress controller that runs alongside NGINX Open Source instances in Kubernetes environments.

- 2 Pods:

  - nginx-vod-module-container - an NGINX VOD module container serving as the backend for the video streaming service.

  - nginx-hello-container - an NGINX web server container with HTML and resource files serving as a YouTube-like front-end web application with HTML5 video players.

Prerequisites

- Install Ubuntu 22.04 on the Ampere Altra platform.

- A laptop or workstation configured as the Bastion node for deploying the VOD PoC and preparing the VOD demo files.

- The following executable files are required:

  - kubectl version 1.24.10 or later

  - git

  - tar

  - docker or podman

  - homebrew for macOS

  - dnsmasq

Deploy Canonical MicroK8s on Ampere Altra Platform

1.Log in to the Ubuntu 22.04 server (Ampere Altra platform) and add its IP address to the /etc/hosts file on the bastion node (laptop or workstation).

$ echo "10.76.87.126 node1" | sudo tee -a /etc/hosts

2.Set the parameter vm.nr_hugepages (huge pages) to 1024 to enable OpenEBS Mayastor on MicroK8s on the Ampere Altra platform.

$ sudo sysctl vm.nr_hugepages=1024

$ echo 'vm.nr_hugepages=1024' | sudo tee -a /etc/sysctl.conf

3.Mayastor requires 2 kernel modules, nvme_fabrics and nvme_tcp. Install the modules with the following command.

$ sudo apt install linux-modules-extra-$(uname -r)

4.Then enable the modules:

$ sudo modprobe nvme_tcp

$ echo 'nvme-tcp' | sudo tee -a /etc/modules-load.d/microk8s-mayastor.conf

5.Reboot the system to make sure the changes are applied permanently.

$ sudo reboot
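After the reboot, it can be useful to confirm that the huge pages and NVMe modules from steps 2 to 4 survived. The following is a minimal sketch, not part of the original procedure: the helper names hugepages_ok and module_loaded are hypothetical, and the optional file arguments exist only so the checks can be exercised against sample files. Modules compiled into the kernel do not appear in /proc/modules, so a failed module check is a hint rather than proof.

```shell
# Hypothetical helper: succeeds when the meminfo file reports at least
# the wanted number of huge pages (1024 in this tutorial).
hugepages_ok() {
  want="$1"
  meminfo="${2:-/proc/meminfo}"
  have="$(awk '/^HugePages_Total:/ {print $2}' "$meminfo")"
  [ "${have:-0}" -ge "$want" ]
}

# Hypothetical helper: succeeds when the named module is listed in a file
# that uses the /proc/modules format (module name in the first column).
module_loaded() {
  name="$1"
  modules="${2:-/proc/modules}"
  awk -v m="$name" '$1 == m {found = 1} END {exit !found}' "$modules"
}
```

On the server, a call such as `hugepages_ok 1024 && module_loaded nvme_tcp && module_loaded nvme_fabrics && echo "ready for Mayastor"` would report whether the prerequisites are in place.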

6.After the system has booted, verify that the MicroK8s stable channels include 1.24/stable.

$ snap info microk8s | grep stable | head -n8

7.Install MicroK8s on the server.

$ sudo snap install microk8s --classic --channel=1.24/stable
microk8s (1.24/stable) v1.24.10 from Canonical✓ installed

8.Check that the instance reports 'microk8s is running'. Note that high availability is off and the datastore is on localhost.

$ sudo microk8s status --wait-ready | head -n4
microk8s is running
high-availability: no
  datastore master nodes: 127.0.0.1:19001
  datastore standby nodes: none

9.Add the user to the microk8s group and take ownership of ~/.kube.

$ sudo usermod -a -G microk8s $USER
$ sudo chown -f -R $USER ~/.kube

10.Create an alias for the kubectl embedded in MicroK8s. After running 'newgrp microk8s', it can be called simply as 'kubectl'.

$ sudo snap alias microk8s.kubectl kubectl
$ newgrp microk8s

11.To check the node status, run kubectl get node on the server.

$ kubectl get node
NAME    STATUS   ROLES    AGE    VERSION
node1   Ready    <none>   3d2h   v1.24.10-2+123bfdfc196019

12.Verify that the cluster status is “running”.

$ microk8s status
microk8s is running
high-availability: no
  datastore master nodes: 127.0.0.1:19001
  datastore standby nodes: none
addons:
  enabled:
    ha-cluster           # (core) Configure high availability on the current node
  disabled:
    community            # (core) The community addons repository
    dashboard            # (core) The Kubernetes dashboard
    dns                  # (core) CoreDNS
    helm                 # (core) Helm 2 - the package manager for Kubernetes
    helm3                # (core) Helm 3 - Kubernetes package manager
    ingress              # (core) Ingress controller for external access
    host-access          # (core) Allow Pods connecting to Host services smoothly
    hostpath-storage     # (core) Storage class; allocates storage from host directory
    mayastor             # (core) OpenEBS MayaStor
    metallb              # (core) Loadbalancer for your Kubernetes cluster
    metrics-server       # (core) K8s Metrics Server for API access to service metrics
    prometheus           # (core) Prometheus operator for monitoring and logging
    rbac                 # (core) Role-Based Access Control for authorisation
    registry             # (core) Private image registry exposed on localhost:32000
    storage              # (core) Alias to hostpath-storage add-on, deprecated

13.Enable add-ons dns, helm3, and ingress.

$ microk8s enable dns helm3 ingress

14.Enable add-on Mayastor with the local storage and set default pool size as 20GB.

$ microk8s enable core/mayastor --default-pool-size 20G

15.Check the status of the deployment on the mayastor namespace.

$ kubectl get all -n mayastor
NAME                                          READY   STATUS    RESTARTS       AGE
pod/mayastor-csi-ffzth                        2/2     Running   8 (16m ago)    3d1h
pod/etcd-operator-mayastor-869bd676c6-ddfnk   1/1     Running   4 (16m ago)    3d1h
pod/etcd-m9gkcnc4x9                           1/1     Running   4 (16m ago)    3d1h
pod/core-agents-c9b664764-dlxqf               1/1     Running   4 (16m ago)    3d1h
pod/msp-operator-6b67c55f48-dmrzp             1/1     Running   4 (16m ago)    3d1h
pod/mayastor-h8p8r                            1/1     Running   4 (16m ago)    3d1h
pod/rest-75c66999bd-5btkd                     1/1     Running   4 (16m ago)    3d1h
pod/csi-controller-b5b975dc8-2srw8            3/3     Running   12 (16m ago)   3d1h

NAME                  TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)             AGE
service/core          ClusterIP   None             <none>        50051/TCP           3d1h
service/rest          ClusterIP   10.152.183.17    <none>        8080/TCP,8081/TCP   3d1h
service/etcd-client   ClusterIP   10.152.183.220   <none>        2379/TCP            3d1h
service/etcd          ClusterIP   None             <none>        2379/TCP,2380/TCP   3d1h

NAME                          DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
daemonset.apps/mayastor-csi   1         1         1       1            1           <none>          3d1h
daemonset.apps/mayastor       1         1         1       1            1           <none>          3d1h

NAME                                     READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/etcd-operator-mayastor   1/1     1            1           3d1h
deployment.apps/core-agents              1/1     1            1           3d1h
deployment.apps/msp-operator             1/1     1            1           3d1h
deployment.apps/rest                     1/1     1            1           3d1h
deployment.apps/csi-controller           1/1     1            1           3d1h

NAME                                                DESIRED   CURRENT   READY   AGE
replicaset.apps/etcd-operator-mayastor-869bd676c6   1         1         1       3d1h
replicaset.apps/core-agents-c9b664764               1         1         1       3d1h
replicaset.apps/msp-operator-6b67c55f48             1         1         1       3d1h
replicaset.apps/rest-75c66999bd                     1         1         1       3d1h
replicaset.apps/csi-controller-b5b975dc8            1         1         1       3d1h

$ kubectl get pod -n mayastor -owide
NAME                                      READY   STATUS    RESTARTS       AGE    IP             NODE    NOMINATED NODE   READINESS GATES
mayastor-csi-ffzth                        2/2     Running   8 (17m ago)    3d1h   10.76.87.209   node1   <none>           <none>
etcd-operator-mayastor-869bd676c6-ddfnk   1/1     Running   4 (17m ago)    3d1h   10.1.29.209    node1   <none>           <none>
etcd-m9gkcnc4x9                           1/1     Running   4 (17m ago)    3d1h   10.1.29.207    node1   <none>           <none>
core-agents-c9b664764-dlxqf               1/1     Running   4 (17m ago)    3d1h   10.1.29.217    node1   <none>           <none>
msp-operator-6b67c55f48-dmrzp             1/1     Running   4 (17m ago)    3d1h   10.1.29.203    node1   <none>           <none>
mayastor-h8p8r                            1/1     Running   4 (17m ago)    3d1h   10.76.87.209   node1   <none>           <none>
rest-75c66999bd-5btkd                     1/1     Running   4 (17m ago)    3d1h   10.1.29.221    node1   <none>           <none>
csi-controller-b5b975dc8-2srw8            3/3     Running   12 (17m ago)   3d1h   10.76.87.209   node1   <none>           <none>

16.Edit the NGINX ingress controller DaemonSet and change the value of --publish-status-address from 127.0.0.1 to the host's IP address (10.76.87.126 in this example).

$ kubectl edit ds -n ingress nginx-ingress-microk8s-controller
## the original
--publish-status-address=127.0.0.1
## replace 127.0.0.1 with the host's IP address
--publish-status-address=10.76.87.126

daemonset.apps/nginx-ingress-microk8s-controller edited
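For scripted setups, the same change to --publish-status-address can be made without an interactive editor. The following is a sketch under assumptions: the helper name set_publish_address is hypothetical, and piping the rewritten manifest into `kubectl replace -f -` is one common pattern, not something the original procedure prescribes.

```shell
# Hypothetical helper: reads a manifest on stdin and rewrites the value of
# the --publish-status-address flag to the address given as the first argument.
set_publish_address() {
  sed "s/--publish-status-address=[^ \"']*/--publish-status-address=$1/"
}

# Possible use against a live cluster (not executed here):
#   kubectl -n ingress get ds nginx-ingress-microk8s-controller -o yaml \
#     | set_publish_address 10.76.87.126 \
#     | kubectl replace -f -
```

Inspecting the output of the first two pipeline stages before adding the final `kubectl replace` is a reasonable precaution.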

17.Run 'microk8s status' to verify the add-on status.

$ microk8s status
microk8s is running
high-availability: no
  datastore master nodes: 127.0.0.1:19001
  datastore standby nodes: none
addons:
  enabled:
    dns                  # (core) CoreDNS
    ha-cluster           # (core) Configure high availability on the current node
    helm3                # (core) Helm 3 - Kubernetes package manager
    ingress              # (core) Ingress controller for external access
    mayastor             # (core) OpenEBS MayaStor
  disabled:
    community            # (core) The community addons repository
    dashboard            # (core) The Kubernetes dashboard
    helm                 # (core) Helm 2 - the package manager for Kubernetes
    host-access          # (core) Allow Pods connecting to Host services smoothly
    hostpath-storage     # (core) Storage class; allocates storage from host directory
    metallb              # (core) Loadbalancer for your Kubernetes cluster
    metrics-server       # (core) K8s Metrics Server for API access to service metrics
    prometheus           # (core) Prometheus operator for monitoring and logging
    rbac                 # (core) Role-Based Access Control for authorisation
    registry             # (core) Private image registry exposed on localhost:32000
    storage              # (core) Alias to hostpath-storage add-on, deprecated

18.Check StorageClass.

$ kubectl get sc
NAME         PROVISIONER               RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
mayastor-3   io.openebs.csi-mayastor   Delete          WaitForFirstConsumer   false                  3d1h
mayastor     io.openebs.csi-mayastor   Delete          WaitForFirstConsumer   false                  3d1h

19.Check Mayastor pool information from the cluster.

$ kubectl get mayastorpool -n mayastor
NAME                  NODE    STATUS   CAPACITY      USED   AVAILABLE
microk8s-node1-pool   node1   Online   21449670656   0      21449670656

20.Clean up the target storage devices on the node. This erases all data on the listed devices.

$ sudo su -
root@node1:~# umount /dev/nvme1n1 /dev/nvme2n1
root@node1:~# for DISK in "/dev/nvme1n1" "/dev/nvme2n1" ; do
    echo $DISK && \
    sgdisk --zap-all $DISK && \
    dd if=/dev/zero of="$DISK" bs=1M count=100 oflag=direct,dsync && \
    blkdiscard $DISK
done

21.Add the second drive, /dev/nvme1n1, on the node as a Mayastor pool.

$ sudo snap run --shell microk8s -c '
$SNAP_COMMON/addons/core/addons/mayastor/pools.py add --node node1 --device /dev/nvme1n1'
mayastorpool.openebs.io/pool-node1-nvme1n1 created

22.Check Mayastor pool information.

$ kubectl get mayastorpool -n mayastor
NAME                  NODE    STATUS   CAPACITY       USED   AVAILABLE
microk8s-node1-pool   node1   Online   21449670656    0      21449670656
pool-node1-nvme1n1    node1   Online   999225819136   0      999225819136
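The CAPACITY and AVAILABLE columns appear to be plain byte counts, which are hard to read at a glance. As a small illustration, the following sketch converts the two values shown above to GiB; the helper name bytes_to_gib is hypothetical.

```shell
# Hypothetical helper: converts a byte count to GiB with two decimals.
bytes_to_gib() {
  awk -v b="$1" 'BEGIN { printf "%.2f\n", b / (1024 * 1024 * 1024) }'
}

bytes_to_gib 21449670656    # default pool from --default-pool-size 20G, prints 19.98
bytes_to_gib 999225819136   # pool backed by /dev/nvme1n1, prints 930.60
```

The default pool comes out slightly below 20 GiB, which is consistent with a 20G pool file minus a small amount of overhead.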

23.Generate a YAML file, test-pod-with-pvc.yaml, that defines a Persistent Volume Claim (PVC) and an NGINX pod that mounts it, then apply it.

$ cat << EOF > test-pod-with-pvc.yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-pvc
spec:
  storageClassName: mayastor
  accessModes: [ReadWriteOnce]
  resources: { requests: { storage: 5Gi } }
---
apiVersion: v1
kind: Pod
metadata:
  name: test-nginx
spec:
  volumes:
    - name: pvc
      persistentVolumeClaim:
        claimName: test-pvc
  containers:
    - name: nginx
      image: nginx
      ports:
        - containerPort: 80
      volumeMounts:
        - name: pvc
          mountPath: /usr/share/nginx/html
EOF

$ kubectl apply -f test-pod-with-pvc.yaml
persistentvolumeclaim/test-pvc created
pod/test-nginx created

$ kubectl get pvc,pod
NAME                             STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/test-pvc   Bound    pvc-67e1d137-b79f-4098-9a07-394600622b9c   5Gi        RWO            mayastor       2m36s

NAME             READY   STATUS    RESTARTS   AGE
pod/test-nginx   1/1     Running   0          2m36s

24.Verify that the PVC is mounted in the NGINX container inside the pod.

$ kubectl exec -it pod/test-nginx -- sh
# cd /usr/share/nginx/html
# ls -al
total 24
drwxr-xr-x 3 root root  4096 Apr 18 06:44 .
drwxr-xr-x 3 root root  4096 Apr 12 04:42 ..
drwx------ 2 root root 16384 Apr 18 06:44 lost+found
# mount | grep nginx
/dev/nvme4n1 on /usr/share/nginx/html type ext4 (rw,relatime,stripe=32)
# lsblk | grep nvme4n1
nvme4n1 259:7 0 5G 0 disk /usr/share/nginx/html
# exit
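Because the mayastor storage classes use the WaitForFirstConsumer binding mode, the claim stays Pending until the pod that uses it is scheduled, so the PVC may not show Bound immediately after kubectl apply. The following is a minimal polling sketch; the helper name wait_pvc_bound and the POLL_SECONDS variable are hypothetical conveniences, not part of the original procedure.

```shell
# Hypothetical helper: polls a PVC until its phase is "Bound".
# $1 = claim name, $2 = number of attempts (default 30).
wait_pvc_bound() {
  pvc="$1"
  tries="${2:-30}"
  while [ "$tries" -gt 0 ]; do
    phase="$(kubectl get pvc "$pvc" -o jsonpath='{.status.phase}')"
    [ "$phase" = "Bound" ] && return 0
    tries=$((tries - 1))
    sleep "${POLL_SECONDS:-2}"   # pause between attempts
  done
  return 1
}
```

A call such as `wait_pvc_bound test-pvc && kubectl get pvc,pod` would then print the status table only once the claim is bound.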

25.Delete the PVC and NGINX container.

$ kubectl delete -f test-pod-with-pvc.yaml

26.To run kubectl from a local machine instead of the server, export the kubeconfig file from MicroK8s with the following commands. Create ~/.kube first so that the file can be written into it. The scp line applies only when a kubeconfig file was previously saved locally as node1/kubeconfig.

% mkdir -p ~/.kube
% ssh ampere@node1 microk8s config > ~/.kube/config
% scp node1/kubeconfig ~/.kube/config
% export KUBECONFIG=~/.kube/config
% kubectl get nodes

Deploying the Demo on MicroK8s Single Node

1.Access the target MicroK8s cluster.

% kubectl get nodes -owide
NAME    STATUS   ROLES    AGE    VERSION                     INTERNAL-IP    EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION      CONTAINER-RUNTIME
node1   Ready    <none>   3d3h   v1.24.10-2+123bfdfc196019   10.76.87.209   <none>        Ubuntu 22.04.1 LTS   5.15.0-60-generic   containerd://1.5.13

2.Create a namespace as vod-poc.

% kubectl create ns vod-poc

3.Obtain the source code from the 2 GitHub repos.

% git clone https://github.com/AmpereComputing/nginx-hello-container
% git clone https://github.com/AmpereComputing/nginx-vod-module-container

- There are two yaml files for deploying VOD PoC:

- nginx-hello-container/MicroK8s/nginx-front-app.yaml

- nginx-vod-module-container/MicroK8s/nginx-vod-app.yaml

4.Deploy the NGINX web server container as a StatefulSet with its PVC template, service, and ingress. Before deploying, edit the host in both YAML files (nginx-front-app.yaml and nginx-vod-app.yaml) to match the records in the dnsmasq server. The replicas field in this file is set to 3; change it to 1 to deploy a single pod.

% kubectl -n vod-poc create -f nginx-hello-container/MicroK8s/nginx-front-app.yaml
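The replicas change described in step 4 can also be made without opening an editor. The following sketch assumes the manifest contains a line of the form `replicas: 3`, which has not been verified against the repository; the helper name set_replicas and the output file name are hypothetical.

```shell
# Hypothetical helper: reads a manifest on stdin and sets every
# "replicas: <number>" line to the count given as the first argument.
set_replicas() {
  sed -E "s/^([[:space:]]*replicas:)[[:space:]]*[0-9]+/\1 $1/"
}

# Possible use (writes a modified copy, leaving the original untouched):
#   set_replicas 1 < nginx-hello-container/MicroK8s/nginx-front-app.yaml \
#     > nginx-front-app-single.yaml
```

Writing to a separate file keeps the cloned repository clean and makes it easy to compare the two manifests with diff before running kubectl create.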

5.Deploy NGINX VOD module container in StatefulSet with its PVC template, service, and ingress.

% kubectl -n vod-poc create -f nginx-vod-module-container/MicroK8s/nginx-vod-app.yaml

6.Check the deployment status in vod-poc namespace.

% kubectl -n vod-poc get pod,pvc -owide
% kubectl -n vod-poc get svc,ingress -owide

7.Prepare vod-demo.tgz (for video files) and nginx-front-demo.tgz (for front-end web presentation) first for the VOD demo:

- To prepare the HTML and resource files for nginx-front-demo.tgz, use the HTML file template (index.html) and resources (JavaScript and CSS files) on GitHub, https://github.com/AmpereComputing/nginx-hello-container/. Two example HTML files, hls.html and dash.html, under the /examples/html folder of the GitHub repo, https://github.com/AmpereComputing/nginx-vod-module-container/, serve as example HTML5 video players for HLS and MPEG-DASH.

- To prepare videos for vod-demo.tgz, use the shell script, transcoder.sh, under /examples/bin in the GitHub repo, https://github.com/AmpereComputing/nginx-vod-module-container/, to transcode and prepare videos.

- Once those files are ready, use the commands below to compress them into 2 TGZ files.

% tar zcvf vod-demo.tgz vod-demo
% tar zcvf nginx-front-demo.tgz nginx-front-demo
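Later steps extract these archives inside the pods and expect the files under a top-level directory (for example, vod-demo/), so it is worth confirming the archive layout before uploading. The following sketch wraps the tar commands above with a listing check; the helper name bundle_dir is hypothetical.

```shell
# Hypothetical helper: archives a directory as <dir>.tgz, then lists the
# archive to confirm it is readable. Fails if either step fails.
bundle_dir() {
  dir="$1"
  tar zcf "$dir.tgz" "$dir" && tar ztf "$dir.tgz" > /dev/null
}

# Possible use:
#   bundle_dir vod-demo && bundle_dir nginx-front-demo
#   tar ztf vod-demo.tgz | head    # entries should start with vod-demo/
```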

8.For deploying the video files to the container in the nginx-vod-app pod, a web server will be required to host those video and subtitle files.

Create a sub-directory such as "nginx-html", then move vod-demo.tgz and nginx-front-demo.tgz into it. Run the NGINX web server container with that directory mounted.

% mkdir nginx-html
% mv vod-demo.tgz nginx-html
% mv nginx-front-demo.tgz nginx-html
% cd nginx-html
% docker run -d --rm -p 8080:8080 --name nginx-html --user 1001 -v $PWD:/usr/share/nginx/html docker.io/mrdojojo/nginx-hello-app:1.1-arm64

9.Deploy the bundle files to the VOD pods.

- Open a shell in the container of the pod nginx-front-app-0 in the vod-poc namespace with the commands below, then use wget to download the files from the web server on the Bastion node.

- Download the front-end files from nginx-front-demo.tgz:

% kubectl -n vod-poc exec -it pod/nginx-front-app-0 -- sh
/ # cd /usr/share/nginx/html/
/usr/share/nginx/html # wget http://[Bastion node's IP address]:8080/nginx-front-demo.tgz
/usr/share/nginx/html # tar zxvf nginx-front-demo.tgz
/usr/share/nginx/html # sed -i "s,http://\[vod-demo\]/,http://vod.microk8s.ampere/,g" *.html
/usr/share/nginx/html # rm -rf nginx-front-demo.tgz

Repeat for the other 2 pods, nginx-front-app-1 and nginx-front-app-2.

- Download the file vod-demo.tgz, which contains the video and subtitle files (mp4 and vtt), with wget from the web server on the Bastion node to the pod nginx-vod-app-0.

% kubectl -n vod-poc exec -it pod/nginx-vod-app-0 -- sh
/ # cd /opt/static/videos/
/opt/static/videos # wget http://[Bastion node's IP address]:8080/vod-demo.tgz
/opt/static/videos # tar zxvf vod-demo.tgz
/opt/static/videos # mv vod-demo/* .
/opt/static/videos # rm -rf vod-demo

Repeat for the other 2 pods, nginx-vod-app-1 and nginx-vod-app-2.
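Repeating the interactive session for each of the 3 pods is error-prone, so the same commands can be run non-interactively in a loop. The following is a sketch under assumptions: the helper name run_in_pods and the BASTION_IP variable are hypothetical, and the pods are assumed to follow the StatefulSet naming shown above (nginx-vod-app-0 to nginx-vod-app-2).

```shell
# Hypothetical helper: runs one shell script in pods <prefix>-0 through
# <prefix>-(count-1) in the vod-poc namespace, stopping at the first failure.
run_in_pods() {
  prefix="$1"
  count="$2"
  script="$3"
  i=0
  while [ "$i" -lt "$count" ]; do
    kubectl -n vod-poc exec "pod/$prefix-$i" -- sh -c "$script" || return 1
    i=$((i + 1))
  done
}

# Possible use, with BASTION_IP set to the Bastion node's IP address:
#   run_in_pods nginx-vod-app 3 \
#     "cd /opt/static/videos && wget http://$BASTION_IP:8080/vod-demo.tgz \
#      && tar zxf vod-demo.tgz && mv vod-demo/* . && rm -rf vod-demo"
```

The same helper could be pointed at the nginx-front-app pods with the front-end commands from this step.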

10.Publish the domain name A records to the DNS server.

10.76.87.126 demo.microk8s.hhii.ampere A 10min
10.76.87.126 vod.microk8s.hhii.ampere A 10min

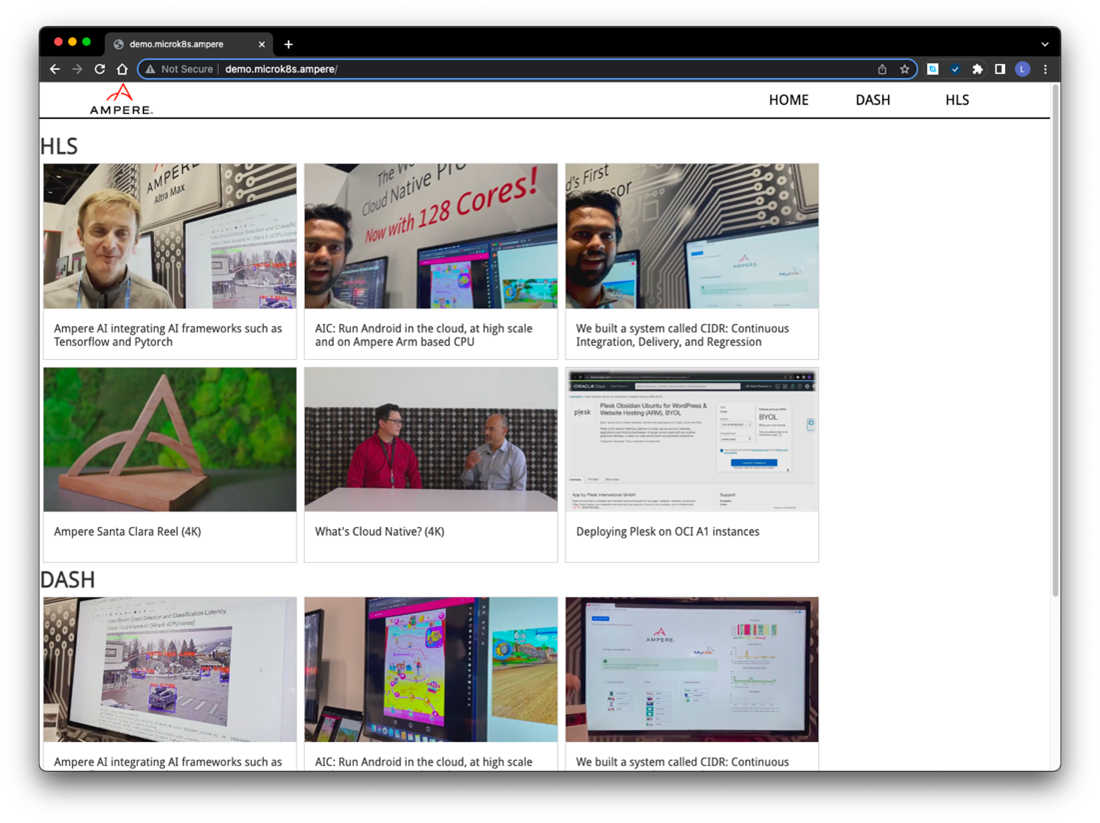
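If dnsmasq (listed in the prerequisites) is the DNS server, the two A records above could be expressed as host-record entries. The following sketch only generates the configuration lines; the helper name to_host_records is hypothetical, and the file the lines are placed in (for example, a file under /etc/dnsmasq.d/) is an assumption that depends on the local dnsmasq setup.

```shell
# Hypothetical helper: turns "IP NAME" pairs on stdin into dnsmasq
# host-record lines of the form host-record=NAME,IP.
to_host_records() {
  awk 'NF >= 2 { printf "host-record=%s,%s\n", $2, $1 }'
}

printf '%s\n' \
  '10.76.87.126 demo.microk8s.hhii.ampere' \
  '10.76.87.126 vod.microk8s.hhii.ampere' | to_host_records
```

dnsmasq needs to be restarted or reloaded after its configuration changes for the new records to be served.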

11.Finally, open a browser and go to http://demo.microk8s.ampere to test the VOD PoC demo on MicroK8s.
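If the page does not load, it can help to separate DNS problems from ingress problems. The following sketch uses curl's --resolve option to send the request to the ingress address directly, bypassing DNS; the helper name probe_ingress is hypothetical, and the address 10.76.87.126 is the one published in the earlier steps.

```shell
# Hypothetical helper: requests http://<host>/ while forcing <host> to
# resolve to <ip>, and prints only the HTTP status code.
probe_ingress() {
  host="$1"
  ip="$2"
  curl -s -o /dev/null -w '%{http_code}\n' --resolve "$host:80:$ip" "http://$host/"
}

# Possible use:
#   probe_ingress demo.microk8s.ampere 10.76.87.126
```

A successful status code here combined with a failure in the browser would point to name resolution on the client rather than to the ingress or the pods.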

Footnotes

All data and information contained herein is for informational purposes only and Ampere reserves the right to change it without notice. This document may contain technical inaccuracies, omissions and typographical errors, and Ampere is under no obligation to update or correct this information. Ampere makes no representations or warranties of any kind, including but not limited to express or implied guarantees of noninfringement, merchantability, or fitness for a particular purpose, and assumes no liability of any kind. All information is provided “AS IS.” This document is not an offer or a binding commitment by Ampere. Use of the products contemplated herein requires the subsequent negotiation and execution of a definitive agreement or is subject to Ampere’s Terms and Conditions for the Sale of Goods.

System configurations, components, software versions, and testing environments that differ from those used in Ampere’s tests may result in different measurements than those obtained by Ampere.

©2023 Ampere Computing. All Rights Reserved. Ampere, Ampere Computing, Altra and the ‘A’ logo are all registered trademarks or trademarks of Ampere Computing. Arm is a registered trademark of Arm Limited (or its subsidiaries). All other product names used in this publication are for identification purposes only and may be trademarks of their respective companies.

Ampere Computing® / 4655 Great America Parkway, Suite 601 / Santa Clara, CA 95054 / amperecomputing.com